Neural Networks, Machine Learning, AI, and You!

I had originally given this talk at my workplace in October of 2023. I was prompted to put something together in response to all the hype I was seeing in the industry and around the financial services markets. I had two objectives when I started researching this presentation:

- Demystify generative AI: what exactly it was, how it worked, and what it could do

- Identify opportunities to use generative AI within our business and industry

I’m splitting this presentation across three posts, as there’s a lot of content to get through. Part 2 is here, and Part 3 is here.

Here’s a breakdown of the slides and content! It’s worth noting that a lot of this content was based on material I found on Jay Alammar’s site and YouTube channel; you can check it out at https://jalammar.github.io/

It’s also worth noting that there’s quite a bit I’d update on these slides now that I’m nearly a year into working with generative AI, but as a primer it still holds up pretty well.

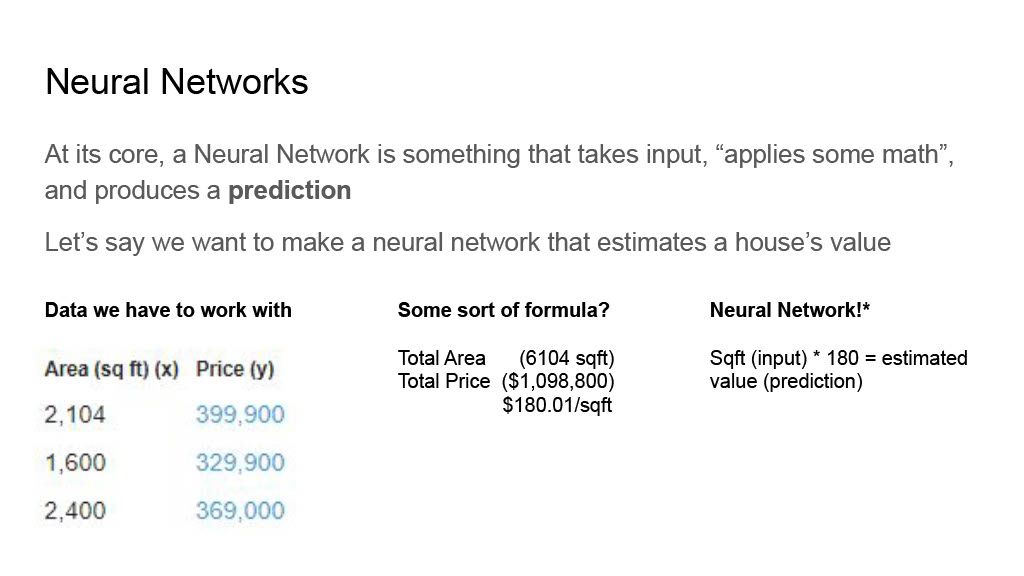

A neural network consists of three components: an input layer, one or more “hidden layers”, and an output (prediction) layer. We can make a simple example here based on house prices (an example taken from Jay’s blog): given the square footage (input layer), apply some calculations (hidden layer), and make a prediction as to what the house’s list price will be.
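To make that concrete, here’s a minimal Python sketch of the simplest possible version: a single “neuron” that multiplies the square footage by a weight and adds a bias. The function name and the weight and bias values are hypothetical placeholders picked for illustration, not numbers learned from real housing data, and with only one calculation there isn’t really a hidden layer yet.

```python
# A minimal sketch of the house-price example: one input, one calculation, one prediction.
# The weight and bias defaults are made-up placeholders, not values learned from real data.

def predict_price(square_feet: float, weight: float = 200.0, bias: float = 50_000.0) -> float:
    """Predict a list price from square footage using a single weight and bias."""
    return weight * square_feet + bias


print(predict_price(1_500))  # 200.0 * 1500 + 50000.0 = 350000.0
```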


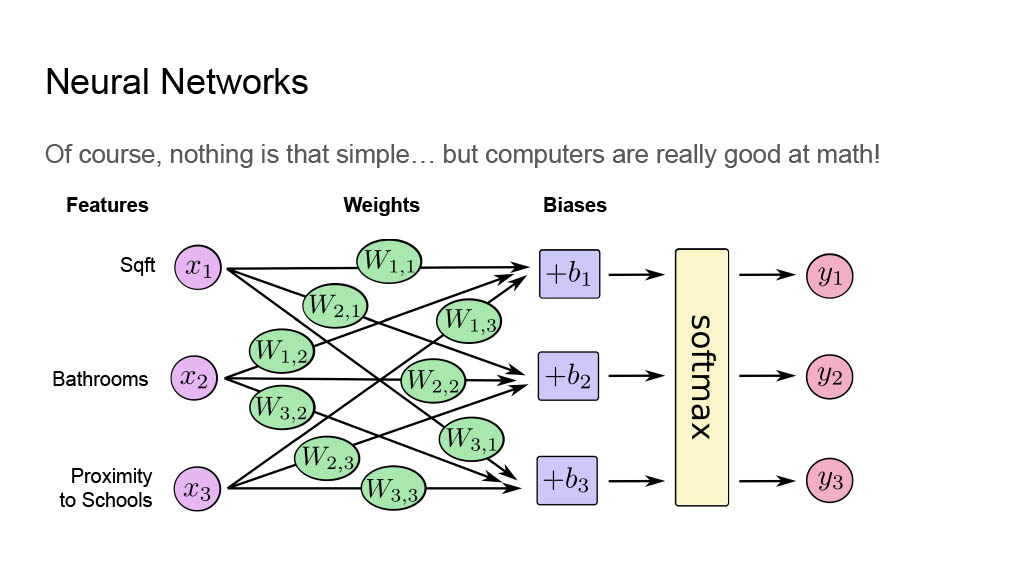

Of course, in practice a neural network is much more complicated, both in the number of inputs it takes in and the calculations it applies to them. The list price of a house isn’t based on square footage alone; the number of bedrooms, proximity to amenities like schools and grocery stores, the overall neighbourhood, and many other factors are considered. We (subconsciously) apply weights (how important an input is in relation to the other inputs) and biases (a baseline offset added to the result regardless of the inputs, which shifts the prediction up or down) to those inputs to help us make a prediction.
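Here’s a slightly bigger sketch, still in plain Python: three inputs feed a hidden layer of two neurons, each computing a weighted sum plus a bias and passing it through ReLU, and an output neuron combines those results into one prediction. ReLU is a common activation function that I’ve assumed here; the slides don’t specify one. Every weight, bias, and input value is made up, including the hypothetical “distance to nearest school” feature.

```python
# A minimal sketch of a tiny neural network with several inputs and one hidden layer.
# All weights, biases, and input values are hypothetical numbers chosen for illustration.

def relu(x: float) -> float:
    """A common activation function: pass positive values through, clamp negatives to 0."""
    return max(0.0, x)


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of the inputs, plus a bias that shifts the result regardless of the inputs."""
    return sum(i * w for i, w in zip(inputs, weights)) + bias


def predict(inputs: list[float]) -> float:
    # Hidden layer: two neurons, each with its own weights and bias, followed by ReLU.
    hidden = [
        relu(neuron(inputs, weights=[0.5, 2.0, -1.0], bias=1.0)),
        relu(neuron(inputs, weights=[0.1, 0.0, 3.0], bias=-2.0)),
    ]
    # Output layer: one neuron combining the hidden values into a single prediction.
    return neuron(hidden, weights=[100.0, 50.0], bias=10.0)


# Hypothetical inputs: [square footage in 1000s, bedrooms, km to nearest school]
print(predict([1.5, 3.0, 0.8]))  # approximately 732.5
```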


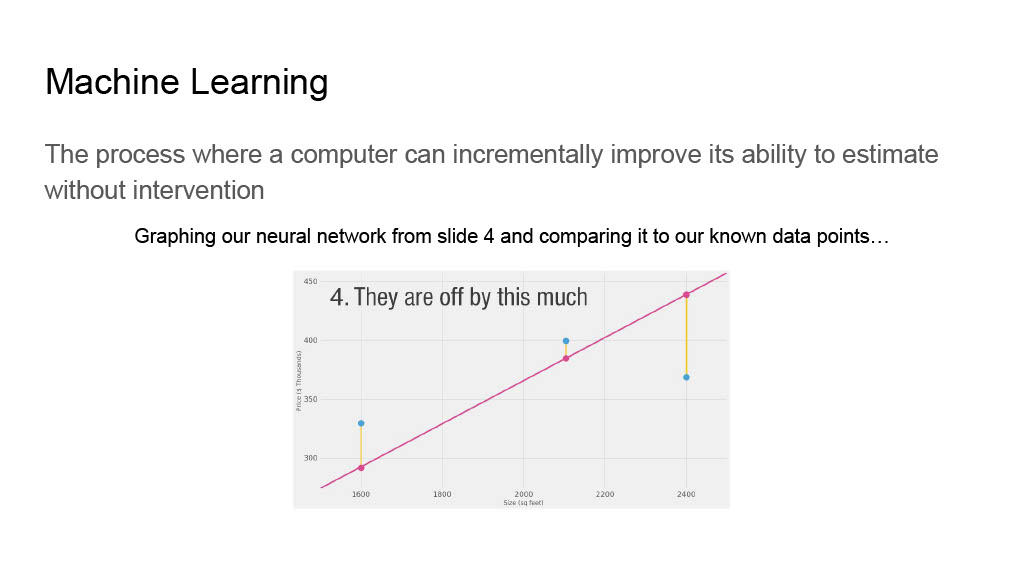

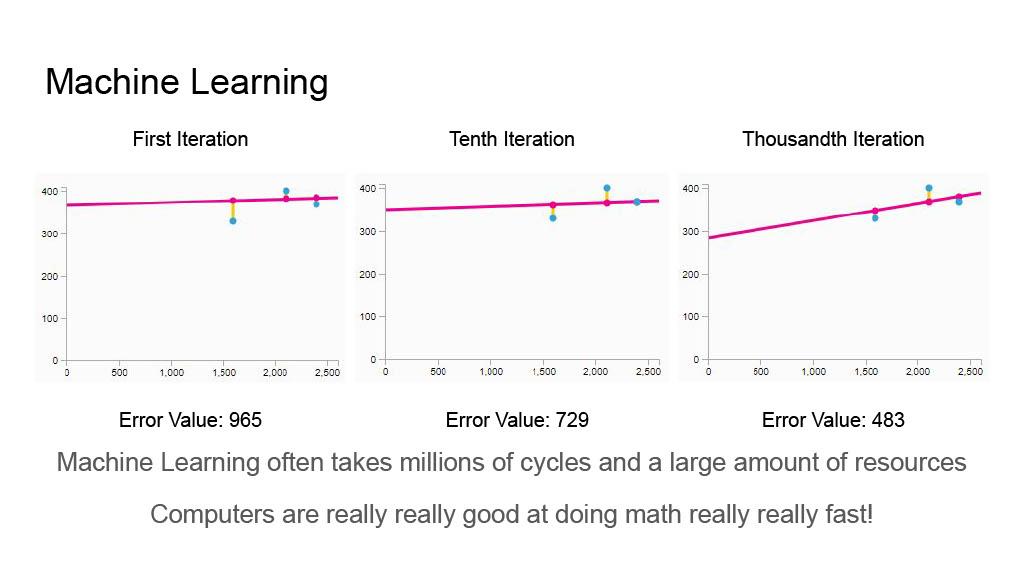

For humans to work this out by hand, we’d normally need complicated pen-and-paper math involving calculus and linear algebra. But because all of this is just math, and computers are really good at doing math quickly, we’re able to use machine learning to derive what these weights and biases should be through repeated trial and adjustment.


Using a computer program, we can test our current weights and biases against examples where we already know the answer, measure how far off our predictions are (the error, often called the loss), and have the computer adjust the weights and biases to get closer. Repeating this cycle until the predictions are good enough is referred to as training a model.
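Here’s a minimal sketch of that test, measure, adjust loop, assuming gradient descent with mean squared error as the error measure (one common choice; the slides don’t name a specific technique). It trains the one-input house-price model on a few made-up “known results” generated from price = 200 * sqft + 50, with both values expressed in thousands so the numbers stay small. The learning rate and epoch count were picked by hand for this toy data.

```python
# A minimal sketch of training via gradient descent on a one-input model.
# The data is made up: (square footage in 1000s, list price in $1000s),
# generated from price = 200 * sqft + 50.

def train(data: list[tuple[float, float]], learning_rate: float = 0.1,
          epochs: int = 2000) -> tuple[float, float]:
    weight, bias = 0.0, 0.0  # start from an uninformed guess
    n = len(data)
    for _ in range(epochs):
        # 1. Test: predict every known example and measure the error.
        # 2. Measure: accumulate the gradient of the mean squared error.
        grad_w = grad_b = 0.0
        for x, y in data:
            error = (weight * x + bias) - y
            grad_w += 2 * error * x / n
            grad_b += 2 * error / n
        # 3. Adjust: nudge weight and bias in the direction that reduces the error.
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias


known_results = [(1.0, 250.0), (1.5, 350.0), (2.0, 450.0), (2.5, 550.0)]
weight, bias = train(known_results)
print(f"weight={weight:.2f}, bias={bias:.2f}")  # weight=200.00, bias=50.00
```

Nobody told the program that the answer was 200 and 50; it recovered those values purely by repeatedly checking its error against the known results.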


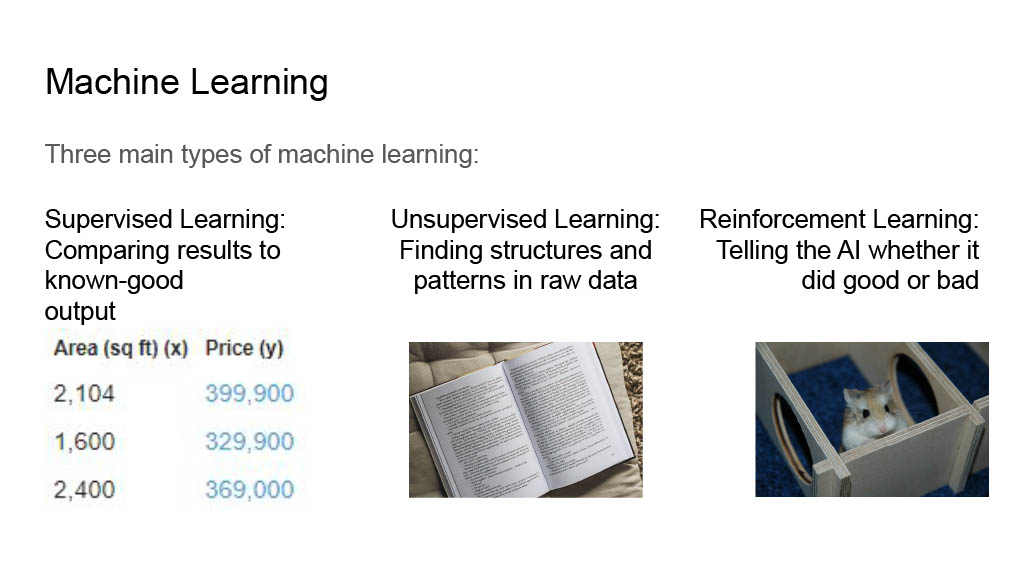

When it comes to training, there are three main approaches:


- Supervised learning - using labelled, known-good data (and a decent amount of it) to measure how correct the predictions are

- Unsupervised learning - feeding unlabelled input into a neural network and seeing what structures and patterns it derives on its own. You can then use those discovered groupings to categorize future inputs

- Reinforcement learning - telling the training process after each prediction whether it did a good or bad thing. This doesn’t have to involve human intervention; playing a video game, for example, gives automated feedback as to whether you’re in a better or worse spot
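To illustrate the unsupervised case, here’s a small k-means clustering sketch in Python. K-means isn’t a neural network, but it’s a classic unsupervised algorithm that shows the idea compactly: given unlabelled prices (made-up values, in $1000s), it discovers two groups on its own and can then assign a new input to the closest group. The starting centroids and iteration count are arbitrary choices for this example.

```python
# A minimal sketch of unsupervised learning: k-means clustering in one dimension.
# No labels are given; the algorithm discovers the groups on its own.
# The price values (in $1000s) are made up for illustration.

def kmeans_1d(values: list[float], centroids: list[float], iterations: int = 10) -> list[float]:
    centroids = list(centroids)
    for _ in range(iterations):
        # Assign each value to its nearest centroid.
        clusters: list[list[float]] = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Move each centroid to the mean of its assigned values (keep it if the cluster is empty).
        centroids = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
    return centroids


def classify(value: float, centroids: list[float]) -> int:
    """Assign a new, unseen input to the nearest discovered group."""
    return min(range(len(centroids)), key=lambda i: abs(value - centroids[i]))


prices = [310, 295, 330, 305, 890, 920, 870, 905]
centroids = kmeans_1d(prices, centroids=[prices[0], prices[-1]])
print(centroids)                  # [310.0, 896.25]
print(classify(880, centroids))   # 1 (the higher-priced group)
```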

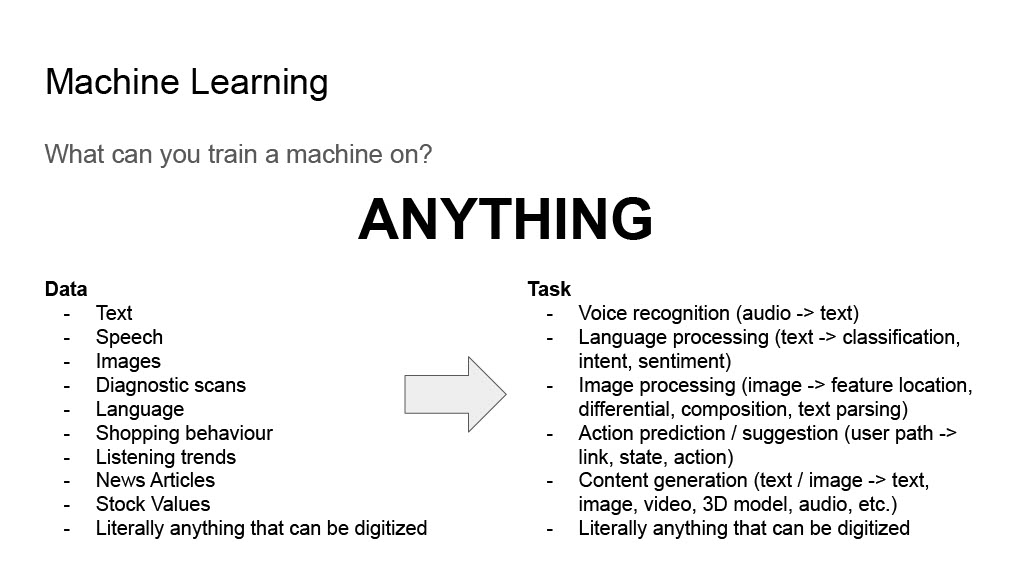

One of the coolest things about this new way of applying artificial intelligence is that our input layer can be basically any sort of digital information, and our output layer can be any sort of prediction that can be expressed digitally. This is why we can create images from text descriptions, extract MIDIs from MP3s, or make videos based on soundtracks.
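The reason this works is that every kind of digital information is ultimately stored as numbers. This simplified sketch turns text and a tiny grayscale image into lists of numbers between 0 and 1 that could be fed to an input layer. Real generative models use far more sophisticated encodings (tokenizers, learned embeddings, and so on); the divide-by-255 scaling here is just an assumption to illustrate the idea.

```python
# A simplified sketch: turning different kinds of digital data into lists of numbers
# that a network's input layer could consume. Real systems use far more sophisticated
# encodings (e.g. tokenizers and learned embeddings); this only illustrates the idea.

def encode_text(text: str) -> list[float]:
    """Encode text as its UTF-8 bytes, each scaled to the range 0..1."""
    return [b / 255 for b in text.encode("utf-8")]


def encode_image(pixels: list[list[int]]) -> list[float]:
    """Flatten a grid of 0..255 grayscale pixel values into one list scaled to 0..1."""
    return [p / 255 for row in pixels for p in row]


print(len(encode_text("house")))            # 5 numbers, one per byte
print(encode_image([[0, 255], [255, 0]]))   # [0.0, 1.0, 1.0, 0.0]
```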
