Microsoft Ignite 2025

I’m attending MS Ignite virtually this year, mostly from the perspective of a generative AI developer, on behalf of my company, LogiSense. I thought I’d share a few of my notes from various sessions here in case others are interested.

Keynote & Day 1

- Windows 365 Link, basically a Steam Link/Chromebook for Windows. It’s a little Apple TV-looking device that just loads cloud-based workstations. The device costs $350, but the cloud workstation itself is another $30-$70/month

- Lots of focus on security updates; it seems like they’ve got a response to the CrowdStrike outage that happened earlier this year

- Big focus on Copilot (of course). Lots of work done around AI tooling in Azure, making it even easier to spin up your own Copilot agents and include your data. Agent definitions can be created as reusable templates and shared with others (both third-party and private)

- A bunch of new tooling around Copilot analytics to measure the ROI on AI investments

- Fabric DB - SQL Server + MS Fabric. Basically allows you to declare and run an operational database that is auto synced with your data lake for AI uses

- Apparently MS has made a huge step up in the quantum computing space too… combining that with some of their other partners (e.g. Protein Design, who won a Nobel Prize for their use of AI), it’s kind of scary how quickly things are advancing in some of these fields

- Copilot for Excel, which is fabled to do for data analysts and business workers what GitHub Copilot has done for developers. It can run Python in-app to provide accurate data analysis

- MS case-studies on real-world business impacts across 200 different companies - https://blogs.microsoft.com/blog/2024/11/12/how-real-world-businesses-are-transforming-with-ai/

- Most of the sessions I attended yesterday were basically partner-backed advertisements about how easy it is to use Azure + AI, complete with concrete examples. MS focused quite a bit on measurable business impact, I think in part to dispel recent reports that Copilot isn’t doing as well for business as people think it is

Day 2

- My favourite session so far was seeing how Toyota is using LLMs and Copilot Agents. As per usual Toyota has amazing design sense that could be replicated across other industries (they’re the whole reason we have Kanban!)

- They started with department-specific copilots that had access to all the department’s information

- They deployed the copilot into the department, but had experts watch its output and correct it in-chat whenever it was wrong. Each correction was captured and stored in the knowledge base alongside the documentation the copilot had previously indexed

- Once the copilot had absorbed enough corrections that the experts no longer needed to correct it, it was put into a larger system

- Toyota has a multi-agent system named after one of their design practices called O-Beya, which means “big room”. Traditionally whenever Toyota wanted to design something new they would get all their top minds in the same room, poking away collaboratively. Now with department-specific copilots, they can effectively do the same thing - throw a query or design goal into a chat room, and the department copilots iterate on the idea with each other. The battery copilot may point out size and weight requirements while the car frame copilot suggests the optimal structure for the frame of the vehicle, and the exterior copilot recommends the best wind-resistance design given that frame and weight

- I’d love to take this approach for some of the knowledge-based AI apps we’ve created internally!

- Semantic Cache - the idea that if a user’s query is similar enough to one you’ve already answered, you can respond with the cached reply. E.g. “how do I make a new service” and “what’s the best way to make a new service” shouldn’t require two separate round trips to the LLM. You can use vectors the same way we’re using them for semantic search to find similar past queries and return their cached replies

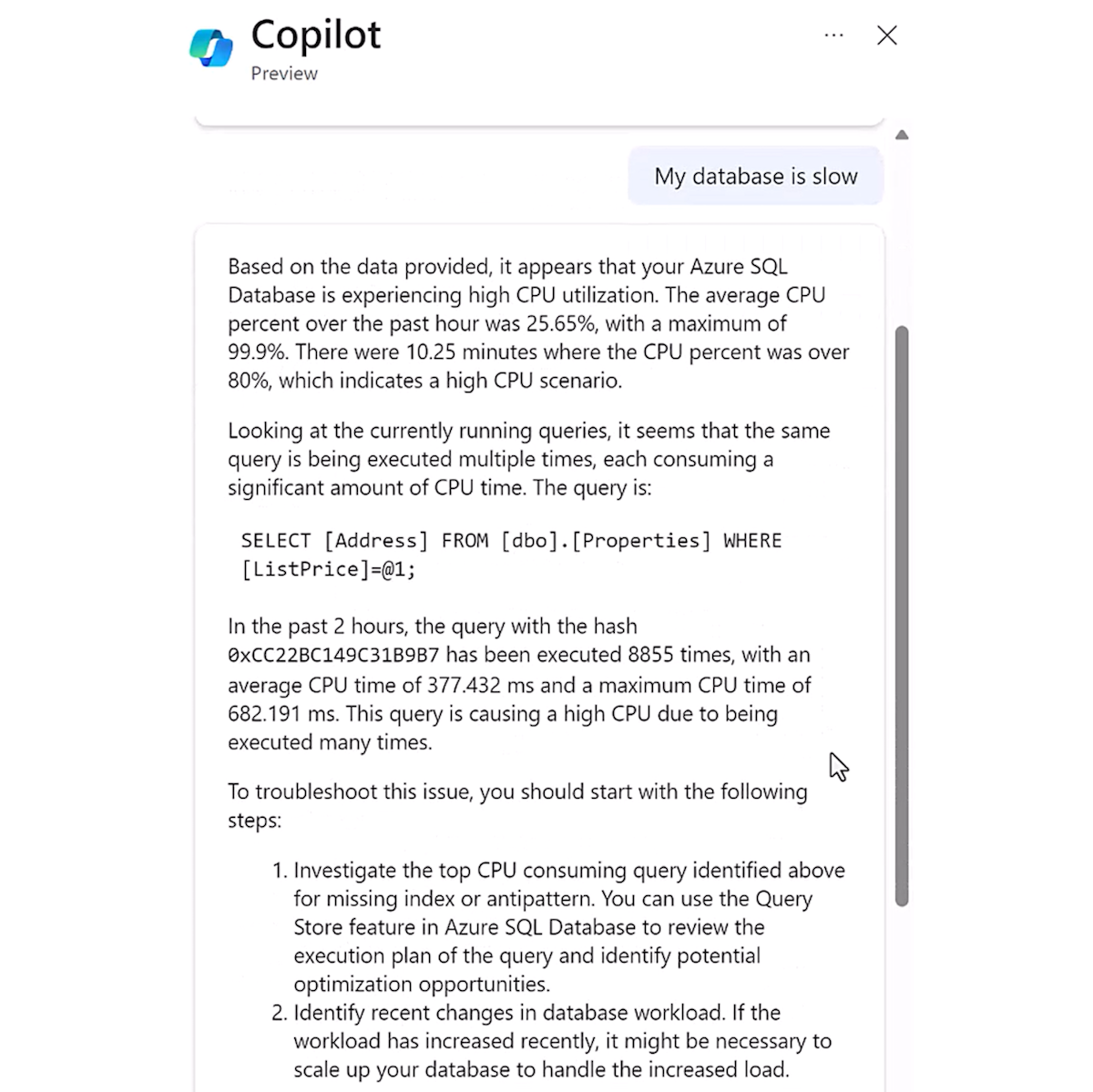

- Copilot for SQL was pretty neat. Basically if you have an Azure-hosted SQL instance you can type in “why is my database slow” and it’ll diagnose the current state, the cause of the current state, and give a recommendation on how to fix it. The demo showed that the SQL instance had high CPU, the high CPU was caused by a specific query, and the query could be optimized by adding an index (and it generated the specific index code)

Image from https://learn.microsoft.com/en-us/azure/azure-sql/copilot/copilot-azure-sql-overview?view=azuresql
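Going back to the semantic cache idea for a moment, here’s a minimal sketch of how it could work. It is not what was shown in the session: `embed` is a toy bag-of-words stand-in for a real embedding model, the stopword list and the 0.7 threshold are made up for illustration, and `SemanticCache` and the `llm` callable are hypothetical names.

```python
import math
import re
from collections import Counter

# Assumption: a tiny stopword list so the toy vectors focus on content words.
STOPWORDS = {"how", "do", "i", "a", "the", "to", "what's", "what", "why", "is", "my"}

def embed(text):
    """Toy stand-in for an embedding model: counts of non-stopword tokens."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(t for t in tokens if t not in STOPWORDS)

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class SemanticCache:
    def __init__(self, llm, threshold=0.7):
        self.llm = llm              # any callable: query -> reply
        self.threshold = threshold  # hypothetical cutoff; tune on real traffic
        self.entries = []           # (query vector, cached reply) pairs

    def ask(self, query):
        """Return (reply, was_cache_hit)."""
        vec = embed(query)
        scored = [(cosine(vec, cached_vec), reply) for cached_vec, reply in self.entries]
        if scored:
            score, reply = max(scored, key=lambda pair: pair[0])
            if score >= self.threshold:
                return reply, True
        reply = self.llm(query)     # cache miss: pay for the round trip once
        self.entries.append((vec, reply))
        return reply, False
```

With this toy embedding, the two “new service” questions above land on the same cache entry while an unrelated question still goes to the LLM. A real version would swap the linear scan for a vector index and would need some way to expire stale replies.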

- They’ve done a lot of work within Azure to enable Copilot scenarios. There was a demo of a full end-to-end flow:

- migrate on-prem SQL Server to Azure

- create a vector index from an existing table (and that index is auto-updated as the table updates)

- create a copilot from the vector index

- create a web interface for the copilot

- deploy web interface to web application

all without writing a single line of code, just done through interface clicks and natural language definitions

- Lots of work in the fine-tuning space, including integrations with third-party tools like Weights & Biases to enable better training results and monitoring

- Seeing all the Azure tooling around AI makes me jealous; we don’t have much (if any) of it in AWS. I’m actually building a rudimentary prompt management & testing tool, which in Azure is already available with a few clicks.
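Coming back to Toyota’s O-Beya setup from earlier in the day, the “big room” loop can be sketched roughly like this. Everything here is an assumption for illustration: the agents are stub functions with placeholder replies rather than real department copilots, and `big_room`, `has_spoken`, and the shared-transcript shape are hypothetical names, not anything Toyota showed.

```python
from typing import Callable, Dict, List, Tuple

Transcript = List[Tuple[str, str]]        # (agent name, contribution)
Agent = Callable[[str, Transcript], str]  # returns "" when it has nothing to add

def has_spoken(transcript, name):
    return any(author == name for author, _ in transcript)

def big_room(goal: str, agents: Dict[str, Agent], max_rounds: int = 5) -> Transcript:
    """Let every agent read the shared transcript each round until nobody adds anything."""
    transcript: Transcript = []
    for _ in range(max_rounds):
        added = False
        for name, agent in agents.items():
            reply = agent(goal, transcript)
            if reply:
                transcript.append((name, reply))
                added = True
        if not added:
            break
    return transcript

# Stub agents with placeholder replies; real ones would call a department copilot.
def battery(goal, transcript):
    return "" if has_spoken(transcript, "battery") else "Pack size and weight limits go here."

def frame(goal, transcript):
    ready = has_spoken(transcript, "battery") and not has_spoken(transcript, "frame")
    return "Frame structure that fits the battery constraints." if ready else ""

def exterior(goal, transcript):
    ready = has_spoken(transcript, "frame") and not has_spoken(transcript, "exterior")
    return "Low-drag body shape for that frame and weight." if ready else ""

room = {"exterior": exterior, "frame": frame, "battery": battery}
transcript = big_room("design a compact EV", room)
```

The agents are deliberately registered in the “wrong” order, so it takes a few rounds before the exterior agent has a frame proposal to react to. That is the iteration part: each agent only contributes once the others have said something it can build on.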

Day 3

Most of the sessions I attended on Day 3 were about fine-tuning. There was a good presentation by Bayer Crop Science on how they took one of the small language models and fine-tuned it so that it knew agricultural terms better. They combined it with a local RAG pattern over their actual products and manuals, so that they could have on-device, in-field, LLM-driven conversations with producers about their products.

There was another presentation by Cognizant, a consulting company, which has basically abstracted its entire lead generation pipeline into an orchestrated network of AI agents. They did a live demo with a random attendee’s company where they typed in the company name, and their network of AI agents:

- identified the business

- identified use cases where AI may be of use to them

- identified the highest ROI use case to pursue

- generated a simulated data set to be used as a template for the client to fill in with their own data

- generated a network of AI agents around supporting the use case

- deployed the AI network to a web application

Basically abstracting their entire consulting flow to a system of agents. One of their consultants can walk into a company on the first day and say “here’s the thing we made, if we plug in your data it’ll be even better”. It was crazy good.

They also have a similar setup for their intranet, with an AI agent for each “department”, so when someone asks a question each agent has a chance to respond if the question is relevant to it. As an example they asked “my significant other just passed away, what should I do?” and the Legal and HR bots responded with advice based on their internal documentation.
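A rough sketch of that “every department gets a chance to answer” pattern is below. It is only an illustration: keyword overlap stands in for whatever relevance check Cognizant actually uses (presumably an LLM or embeddings), and the keywords, placeholder answers, and the `make_agent` / `ask_all` names are all made up.

```python
import re

def make_agent(name, keywords, answer):
    """Build an agent that replies only when the question mentions one of its keywords."""
    def agent(question):
        words = set(re.findall(r"[a-z]+", question.lower()))
        return f"{name}: {answer}" if words & keywords else None
    return agent

def ask_all(question, agents):
    """Broadcast the question and keep only the agents that chose to answer."""
    replies = (agent(question) for agent in agents)
    return [reply for reply in replies if reply is not None]

# Hypothetical departments; keywords and answers are placeholders.
AGENTS = [
    make_agent("HR", {"passed", "bereavement", "leave"}, "placeholder bereavement-leave guidance"),
    make_agent("Legal", {"passed", "estate", "probate"}, "placeholder estate guidance"),
    make_agent("IT", {"laptop", "password", "vpn"}, "placeholder IT guidance"),
]

replies = ask_all("My significant other just passed away, what should I do?", AGENTS)
```

Here the HR and Legal agents both answer and the IT agent stays quiet, which mirrors the demo. The interesting design choice is that no central router decides who answers; each agent judges relevance for itself.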

There was another similar presentation by Unilever on customer-facing orchestration. Their chat app is a full-screen app that handles product directions, suggestions, and checkout with three separate agents, routed by the intent of the query. The checkout agent has access to the cart API so it can add or remove things in the user’s cart based on the conversation. In the example they gave, the user asked for a recipe using some specific ingredients and flavours, the bot responded with one, the user asked to scale it to 20 servings and then asked to purchase the ingredients, and the checkout bot added the recipe’s ingredients to the cart at the quantities needed.

As for the actual fine-tuning process, of course Azure has all the tools to do it in-cloud :) Lots of demos of their tooling, which has come a long way since last year’s Ignite.
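The recipe flow can be sketched as intent routing over a shared session. This is a toy under stated assumptions, not Unilever’s implementation: intent detection is plain keyword matching instead of an LLM, the recipe is canned and made up, the cart is a dict rather than a real cart API, and putting the scaling step in the directions agent is my guess.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Recipe:
    servings: int
    ingredients: dict  # item name -> grams

@dataclass
class Session:
    recipe: Optional[Recipe] = None
    cart: dict = field(default_factory=dict)  # item name -> grams

def suggest_agent(session, text):
    # A real agent would ask an LLM; this returns one canned, made-up recipe.
    session.recipe = Recipe(servings=4, ingredients={"pasta": 400, "tomato sauce": 250})
    return "How about a simple tomato pasta?"

def directions_agent(session, text):
    # Scale every quantity by wanted / current servings (assumes a recipe exists).
    wanted = int(re.search(r"(\d+)\s+servings", text).group(1))
    old = session.recipe
    scaled = {item: grams * wanted / old.servings for item, grams in old.ingredients.items()}
    session.recipe = Recipe(servings=wanted, ingredients=scaled)
    return f"Scaled to {wanted} servings."

def checkout_agent(session, text):
    # Stand-in for the cart API: add the current recipe's quantities to the cart.
    for item, grams in session.recipe.ingredients.items():
        session.cart[item] = session.cart.get(item, 0) + grams
    return "Added the ingredients to your cart."

def detect_intent(text):
    lowered = text.lower()
    if any(word in lowered for word in ("buy", "purchase", "cart")):
        return "checkout"
    if "servings" in lowered:
        return "directions"
    return "suggest"

AGENTS = {"suggest": suggest_agent, "directions": directions_agent, "checkout": checkout_agent}

def handle(session, text):
    """Route one user message to the agent that matches its intent."""
    return AGENTS[detect_intent(text)](session, text)
```

Going from 4 to 20 servings multiplies every quantity by 5, so 400 g of pasta becomes 2000 g by the time the checkout agent reads the recipe. The agents never call each other; they only share state through the session, which is what lets the checkout step pick up the scaled quantities.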