Semantic Caching - Caching based on Intent

It’s no secret - calls to generative AI APIs are expensive and slow. It seemed like a crazy concept when it was first introduced - having to pay per API call? For an API that takes seconds to return? Who does that? Now that it’s established as normal, strategies are being developed to attempt to reduce cost and improve response times when it comes to generative AI APIs, and one of the most promising is Semantic Caching.

Caching as a software development concept isn’t anything new. The idea is being able to intercept expensive calls that you’ve served before by pulling the previous result from a faster, cheaper store. Caching is used against expensive operational databases, to reduce queries in reporting environments, to avoid expensive API calls over slow networks - it’s even used extensively by everyday consumers and their browsers, often without their knowledge.

Semantic caching, however, is a newer idea. Instead of matching requests exactly, we interpret the intent of a query and search our cache against that, so similar queries can be intercepted before they reach an expensive generative AI language model. Using the same vector and embedding technology we use for things like document search, we can intercept any call to a generative AI that is similar enough to a past query, saving the end user time and our company money.
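To make "similar enough" a bit more concrete, here is a minimal sketch of cosine similarity, one common way of comparing two embedding vectors. The three-dimensional vectors and the `Similarity` class are made up for illustration; real embeddings have far more dimensions, and in practice the search engine does this comparison for us.

```csharp
using System;

// Toy 3-dimensional "embeddings" (hypothetical values, for illustration only).
float[] cached = { 0.9f, 0.1f, 0.4f };      // a previously answered query
float[] similar = { 0.8f, 0.2f, 0.5f };     // a rephrasing of that query
float[] unrelated = { -0.3f, 0.9f, -0.2f }; // a query about something else

// Values near 1 mean the vectors point the same way; values near 0 or below mean they don't.
Console.WriteLine($"similar:   {Similarity.Cosine(cached, similar):F3}");
Console.WriteLine($"unrelated: {Similarity.Cosine(cached, unrelated):F3}");

static class Similarity
{
    // Cosine similarity: dot(a, b) / (|a| * |b|).
    public static double Cosine(ReadOnlySpan<float> a, ReadOnlySpan<float> b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vectors must have the same length.");

        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }
}
```

The rephrased query scores close to 1 against the cached one, while the unrelated query scores below 0, which is the gap a similarity threshold relies on.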

Search and Deploy

To do this, we need a service that can do that vector search for us. For the demonstration I’m going to be using OpenSearch as it’s freely available as a Docker container, but there are any number of services that support vector search. OpenSearch is also used by AWS as their default knowledge base search engine, so we can apply some of the same techniques we may already be using in our existing applications.

I actually had a bit of trouble setting this up out of the box with a single node, and instead fell back on a docker compose script that got two OpenSearch nodes up and running (as well as the dashboard container to use a Web UI to interface with them). You can see my example docker compose file here.

Once you install and run the Docker containers, you’ll need to set up an index. An index is kind of like a database table, in that it has columns of queryable data within it (each with its own type), and each “row” is a document within the index. The analogy isn’t a perfect one, but it’ll be good enough for our purposes today :) To create the index, you can make a PUT request against your OpenSearch instance:

PUT http://localhost:9200/semantic-cache-index

{
    "settings": {
        "index": {
            "knn": true
        }
    },
    "mappings": {
        "properties": {
            "query_Vector": {
                "type": "knn_vector",
                "dimension": 1536
            },
            "result": {
                "type": "text"
            }
        }
    }
}

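The important part of this query is that we’re establishing that this is a “kNN” index (allowing us to do vector search operations) and that the query_Vector field is a knn_vector type. Its dimension (1536) has to match the length of the vectors our embedding model produces, which is 1536 for the text-embedding-3-small model used below.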

The Application

Once you have all that set up, we can switch over to the code. The general algorithm will be as follows:

- Receive a query
- Embed the query
- Search the semantic cache for similar cached entries that match the query
- If a cached entry is found with an acceptable match score, use that result
- Otherwise:
    - query the generative AI for the answer to the query
    - cache the result in the semantic cache, using the embedded query as the key
- Return the result to the user
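Before wiring in real services, the steps above can be sketched with an in-memory stand-in. Everything here is hypothetical scaffolding: `InMemorySemanticCache`, the two-dimensional fake embeddings, and the delegates that play the role of the embedding and chat APIs. It also assumes unit-length vectors, so a plain dot product can stand in for cosine similarity.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Stand-ins for the real services: a lookup table instead of an embedding API,
// and a counter so we can see how often the "model" is actually called.
var fakeEmbeddings = new Dictionary<string, float[]>
{
    ["How many people live in Toronto, Canada?"] = new[] { 0.6f, 0.8f },
    ["What's the population of Toronto?"] = new[] { 0.8f, 0.6f },
    ["How do I bake bread?"] = new[] { -0.8f, 0.6f },
};
string[] queries =
{
    "How many people live in Toronto, Canada?",
    "What's the population of Toronto?",
    "How do I bake bread?",
};
int modelCalls = 0;

var cache = new InMemorySemanticCache(
    embed: q => Task.FromResult(fakeEmbeddings[q]),
    generate: q => { modelCalls++; return Task.FromResult($"answer to: {q}"); },
    threshold: 0.9);

foreach (var query in queries)
{
    var (result, hit) = await cache.AnswerAsync(query);
    Console.WriteLine($"{(hit ? "hit " : "miss")} | {result}");
}
Console.WriteLine($"model calls: {modelCalls}");

public sealed class InMemorySemanticCache
{
    private readonly List<(float[] Vector, string Result)> _entries = new();
    private readonly Func<string, Task<float[]>> _embed;
    private readonly Func<string, Task<string>> _generate;
    private readonly double _threshold;

    public InMemorySemanticCache(
        Func<string, Task<float[]>> embed,
        Func<string, Task<string>> generate,
        double threshold)
    {
        _embed = embed;
        _generate = generate;
        _threshold = threshold;
    }

    public async Task<(string Result, bool CacheHit)> AnswerAsync(string query)
    {
        // Embed the incoming query.
        float[] vector = await _embed(query);

        // Find the most similar cached entry (a linear scan; a vector index does this at scale).
        int bestIndex = -1;
        double bestScore = double.NegativeInfinity;
        for (int i = 0; i < _entries.Count; i++)
        {
            double score = Dot(_entries[i].Vector, vector);
            if (score > bestScore) { bestScore = score; bestIndex = i; }
        }

        // Good enough match: reuse the cached result.
        if (bestIndex >= 0 && bestScore >= _threshold)
            return (_entries[bestIndex].Result, true);

        // Otherwise ask the model and remember the answer, keyed by the embedding.
        string result = await _generate(query);
        _entries.Add((vector, result));
        return (result, false);
    }

    // Dot product; equals cosine similarity only for unit-length vectors (assumed here).
    private static double Dot(float[] a, float[] b)
    {
        double sum = 0;
        for (int i = 0; i < a.Length; i++) sum += a[i] * b[i];
        return sum;
    }
}
```

With these made-up vectors, the second query lands close enough to the first to be served from the cache, so the stand-in model is only called for the first and third queries.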

Generating Embeddings with Semantic Kernel

Using our favourite C# tool for generative AI, Semantic Kernel, we can set up a text embedding service using our OpenAI API key.

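using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Embeddings;

IKernelBuilder kernelBuilder = Kernel.CreateBuilder();

// Embedding model used to vectorize queries (key holds your OpenAI API key).
kernelBuilder.AddOpenAITextEmbeddingGeneration(
    modelId: "text-embedding-3-small",
    apiKey: key);

// Chat model used later, on a cache miss.
kernelBuilder.AddOpenAIChatCompletion(modelId: "gpt-4o-mini", apiKey: key);

Kernel kernel = kernelBuilder.Build();

var embeddingService = kernel.GetRequiredService<ITextEmbeddingGenerationService>();
var generationService = kernel.GetRequiredService<IChatCompletionService>();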

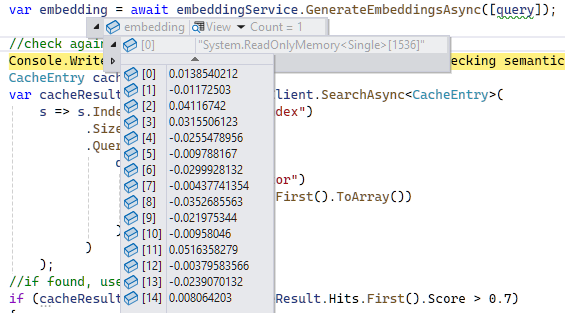

Once we have this configured, it’s a simple matter of calling GenerateEmbeddingsAsync:

var embedding = await embeddingService.GenerateEmbeddingsAsync([query]);

GenerateEmbeddingsAsync expects a list of strings - this allows you to embed multiple documents in one API call as opposed to making one call for each. It returns an IList<ReadOnlyMemory<float>> with one entry per input string; each entry is an array of floating-point numbers that represents the vectorized version of that input.

Searching the Semantic Cache

Now that we’ve got our vector, we can do a kNN search against our semantic cache in OpenSearch.

using OpenSearch.Client;

var openSearchClient = new OpenSearchClient(new Uri("http://localhost:9200"));

CacheEntry cacheEntry = null;

// Ask for the single nearest neighbour of the query embedding.
var cacheResult = await openSearchClient.SearchAsync<CacheEntry>(
    s => s.Index("semantic-cache-index")
        .Size(1)
        .Query(q =>
            q.Knn(k =>
                k.Field("query_Vector")
                    .Vector(embedding.First().ToArray())
                    .K(1)
            )
        )
);

// Only accept that neighbour if it scores above our threshold.
if (cacheResult.Hits.Any() && cacheResult.Hits.First().Score > 0.7)
{
    cacheEntry = cacheResult.Hits.First().Source;
}

Casing is important here - make sure your index and field names match exactly what you created above. You’ll notice query_Vector is capitalized weirdly here; that’s because the OpenSearch.Client .NET library lower-cases the first letter of property names, so the Query_Vector property on CacheEntry becomes query_Vector. If your casing doesn’t line up, you’ll get weird errors like "All shards failed" in the response object.
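The CacheEntry type itself isn’t shown in this post, so here is a guess at its shape, together with a tiny helper that mimics the naming convention described above. `FieldNames.CamelCase` is a hypothetical illustration of the behaviour, not the library’s own code.

```csharp
using System;

Console.WriteLine(FieldNames.CamelCase(nameof(CacheEntry.Query_Vector))); // query_Vector
Console.WriteLine(FieldNames.CamelCase(nameof(CacheEntry.Result)));       // result

// Assumed shape of the cached document: the query embedding plus the model's answer.
public class CacheEntry
{
    public float[] Query_Vector { get; set; } = Array.Empty<float>();
    public string Result { get; set; } = string.Empty;
}

static class FieldNames
{
    // Lower-cases only the first character and leaves the rest untouched.
    public static string CamelCase(string propertyName) =>
        string.IsNullOrEmpty(propertyName)
            ? propertyName
            : char.ToLowerInvariant(propertyName[0]) + propertyName.Substring(1);
}
```

Both outputs line up with the field names in the index mapping, which is why the property is named Query_Vector rather than something more conventional.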

Here we’re asking for the single nearest neighbour to our query embedding, and accepting it only if its score is above a certain threshold (0.7 here). If it clears that bar, we use the stored document to return the result. Obviously you’d do some testing to tweak this threshold to meet your needs.
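One caveat when picking the threshold: the score OpenSearch returns is derived from the vector distance, and isn’t necessarily the cosine similarity itself. As a rough sketch, assume the index uses the k-NN plugin’s default l2 space (the mapping above doesn’t set one), where the score is 1 / (1 + squared distance), and assume unit-length embeddings, which OpenAI documents for its embedding models. Under those assumptions the two can be converted as below. `KnnScore` is a hypothetical helper; check the space type and scoring formula for your own index and OpenSearch version.

```csharp
using System;
using System.Globalization;

double cosine = KnnScore.ToCosine(0.7);
Console.WriteLine(cosine.ToString("F3", CultureInfo.InvariantCulture)); // 0.786

static class KnnScore
{
    // For unit vectors, squared L2 distance d = 2 - 2*cos, so score = 1 / (1 + d) = 1 / (3 - 2*cos).
    public static double FromCosine(double cosine) => 1.0 / (3.0 - 2.0 * cosine);

    // Inverse of the above: cos = (3 - 1/score) / 2.
    public static double ToCosine(double score) => (3.0 - 1.0 / score) / 2.0;
}
```

Under those assumptions, a score threshold of 0.7 corresponds to a cosine similarity of roughly 0.79, so it is somewhat stricter than "70% similar" might suggest.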

Adding Entries to the Cache

If we didn’t get a result, we’ll instead query GPT-4o mini for a response, and save that in our semantic cache so we can avoid future queries for the same request.

if (cacheEntry == null)
{
    // Cache miss: ask the chat model (generationService is the kernel's chat completion service).
    var chatHistory = new ChatHistory();
    chatHistory.AddUserMessage(query);

    var generatedResponse = await generationService.GetChatMessageContentAsync(chatHistory);

    // Store the answer alongside the query embedding so similar queries can reuse it.
    cacheEntry = new CacheEntry
    {
        Query_Vector = embedding.First().ToArray(),
        Result = generatedResponse.Items.First().ToString()
    };

    var response = await openSearchClient.IndexAsync(cacheEntry, i => i.Index("semantic-cache-index"));
}

Example Execution

And it’s as simple as that! Here’s a test run with a bit of debugging text added.

What would you like to know?

How many people live in Toronto, Canada?

[0ms - Embedding]

[848ms - Checking semantic cache]

[1127ms - Querying language model]

[2386ms - Saving result in semantic cache]

[2468ms - Returning result]

As of the most recent estimates, Toronto, Canada, has a population of approximately 2.8 million people. For the most accurate and up-to-date information, it's always a good idea to consult the latest census data or statistics from reliable sources like Statistics Canada.

----------

What would you like to know?

What's the population of Toronto?

[0ms - Embedding]

[524ms - Checking semantic cache]

[590ms - Cache hit]

[590ms - Returning result]

As of the most recent estimates, Toronto, Canada, has a population of approximately 2.8 million people. For the most accurate and up-to-date information, it's always a good idea to consult the latest census data or statistics from reliable sources like Statistics Canada.

----------

As you can see, the first query we ran didn’t have any cached results, so we asked a language model, got a response, and saved it in the cache. The second question was semantically similar enough to the cached query that we could return the result from the cache in about a quarter of the time (590ms versus 2468ms), and without paying for another chat completion. We do still pay for the much cheaper embedding call on every query.

Next Steps

As with any caching strategy, it’s a good idea to discuss cache invalidation. After how long, and under what conditions, would you consider the information in the cache to be ‘stale’ and in need of a refresh?

The interesting thing with a semantic cache is that, because the queries are often informational, you can base your cache expiry on the data sources you’re pulling from. In this example, there’d be no reason for us to update the cache until the underlying language model changed; if we switched to a new model, or the model version incremented, we’d likely want to invalidate the responses we had cached from the previous one.

Of course, in most other circumstances you’re likely generating responses based on retrieval-augmented generation, so your cache expiry will be based on those external sources of information. If you’re authoring a documentation bot, for example, you’d want to refresh the cache as soon as you had indexed new or updated documentation. That still leaves a lot of time for the cache to be helpful, saving you precious milliseconds of compute and expensive API calls.
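As a minimal sketch of both invalidation rules, assume each cache entry is stamped with the model that produced it and the time it was cached. `StampedEntry`, `Freshness`, and the dates below are hypothetical; the cache in this post stores neither field.

```csharp
using System;

var indexedAt = new DateTimeOffset(2024, 9, 1, 0, 0, 0, TimeSpan.Zero);
var entry = new StampedEntry("cached answer", "gpt-4o-mini", indexedAt.AddDays(1));

Console.WriteLine(Freshness.IsFresh(entry, "gpt-4o-mini", indexedAt));            // True
Console.WriteLine(Freshness.IsFresh(entry, "gpt-4o-mini", indexedAt.AddDays(7))); // False: sources re-indexed since
Console.WriteLine(Freshness.IsFresh(entry, "some-newer-model", indexedAt));       // False: model changed

// A cached result stamped with the model that produced it and when it was cached.
public sealed record StampedEntry(string Result, string ModelId, DateTimeOffset CachedAt);

static class Freshness
{
    // An entry is usable only if it came from the current model and was cached
    // after the underlying sources were last indexed.
    public static bool IsFresh(
        StampedEntry entry,
        string currentModelId,
        DateTimeOffset sourcesLastIndexedAt) =>
        entry.ModelId == currentModelId && entry.CachedAt >= sourcesLastIndexedAt;
}
```

A check like this could run on each cache hit, treating a stale entry as a miss so that it gets regenerated and overwritten.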

Another thing I’d do if I were moving this to production is write it as an implementation of Semantic Kernel’s IChatCompletionService, so downstream developers don’t have to bake this cache searching and updating code into their own queries. Providing it as a library that handles all the cache searching and management would let other developers take advantage of the savings without having to put a lot of extra work into it.

Happy caching!