Techniques for Prompt Engineering - Part 2

Continuing from our post the other day about prompt terminology, I’d like to focus on specific techniques that can be used to write prompts that get the results you’re looking for from Generative AIs. This is a user-friendly summary of the research paper available here, based on a presentation I’m giving at my workplace.

Zero-Shot Prompting

This is the prompting style that most people are using now, even if they’re not aware of it. It generally consists of a directive or command, with potentially a few hints as to role, style, or context. This can also include supporting information through a web search-enabled model interface or retrieval-augmented generation.

You are an award winning children's author. //role

Write a short story about a cat. //directive

The story should be in the style of a fairy tale. //style

This story will be told to five-year-olds. //context

The key difference here is that we’re not giving the language model any examples with the prompt; that’s a different style, referred to as Few-Shot Prompting.
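To make the pieces concrete, here is a minimal Python sketch that assembles a zero-shot prompt from the same four parts. The function name `build_zero_shot_prompt` and its parameters are my own, purely for illustration; they don't come from any library.

```python
def build_zero_shot_prompt(directive, role="", style="", context=""):
    """Join a directive with optional hints. No examples are included."""
    # Order mirrors the example above: role, directive, style, context.
    parts = [role, directive, style, context]
    return "\n".join(part.strip() for part in parts if part.strip())


prompt = build_zero_shot_prompt(
    directive="Write a short story about a cat.",
    role="You are an award winning children's author.",
    style="The story should be in the style of a fairy tale.",
    context="This story will be told to five-year-olds.",
)
print(prompt)
```

Only the directive is required; the hints are optional and are simply skipped when left empty.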

Few-Shot Prompting

In few-shot prompting we provide the language model with examples of what we expect it to return. This approach really helps guide the behaviour and output of the model.

Classify the statement as positive or negative.

"I'm happy": Positive

"I'm sad": Negative

"This is the best hot dog I've ever ate!": Positive

"This burger is undercooked": Negative

"The food at this restaurant is terrible": //end of prompt

Given the examples included in the prompt, the language model will continue the pattern and apply its own “next token” logic based on its training. With the prompt above you should get Negative as a response and nothing else - compare that to a standard, verbose, hard-to-parse response that you’d normally get with zero-shot prompting.
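If you're building these prompts in code, a rough sketch might look like the following. Both helper names (`build_few_shot_prompt`, `parse_label`) are hypothetical, and the parsing assumes the model really does answer with a bare label, which is likely with this pattern but not guaranteed.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Lay out labelled examples, then leave the last label open for the model."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f'"{text}": {label}')
    lines.append(f'"{query}":')
    return "\n".join(lines)


def parse_label(response, allowed=("Positive", "Negative")):
    """Map the model's reply onto one of the allowed labels, or fail loudly."""
    cleaned = response.strip().rstrip(".")
    for label in allowed:
        if cleaned.lower() == label.lower():
            return label
    raise ValueError(f"unexpected label: {response!r}")


examples = [
    ("I'm happy", "Positive"),
    ("I'm sad", "Negative"),
    ("This burger is undercooked", "Negative"),
]
prompt = build_few_shot_prompt(
    "Classify the statement as positive or negative.",
    examples,
    "The food at this restaurant is terrible",
)
print(prompt)
```

Validating the reply against the allowed labels is a cheap way to catch the cases where the model wanders off the pattern.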

Thought Generation

Thought generation takes the approach of asking the language model to reason through its response as opposed to just determining the next token to return (or at least in addition to it). Because language models use the entire conversation as input when determining the next token to output (at least within their maximum context length), you’re effectively asking the language model to generate the information it needs to reply to the prompt.

By simply adding something like “Explain your answer step-by-step” to your prompt, the language model reaches into its training data to generate the facts needed to come to a more reasonable answer. This has been shown to work quite well for questions that require complex reasoning or calculation, areas where language models traditionally have issues with zero- or few-shot prompts.

//with Llama 3.1 8B Instruct

> How many Eiffel towers could you stack one on top of another before you reached the upper atmosphere? Respond with the answer only.

5.3

---

> How many Eiffel towers could you stack one on top of another before you reached the upper atmosphere? Explain your answer step by step.

To estimate how many Eiffel Towers we could stack before reaching the upper atmosphere, we'll need to consider a few factors:

1. **Height of the Eiffel Tower**: The Eiffel Tower stands at an impressive 324 meters (1,063 feet) tall.

2. **Height of the upper atmosphere**: The upper atmosphere, also known as the exosphere, begins around 500-600 kilometers (310-373 miles) above the Earth's surface. Let's use 550 kilometers (342 miles) as our reference point.

3. **Stacking assumption**: We'll assume that each Eiffel Tower is perfectly stacked on top of the previous one, without any gaps or irregularities.

Now, let's do some math:

1. Convert the height of the upper atmosphere from kilometers to meters: 550 km × 1000 m/km = 550,000 meters

2. Divide the height of the upper atmosphere by the height of a single Eiffel Tower: 550,000 m ÷ 324 m = approximately 1,698

So, we could stack approximately **1,698 Eiffel Towers** on top of each other before reaching the upper atmosphere.
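One nice side effect of step-by-step answers is that you can check the working. Here's a small Python sketch that adds the cue to a prompt and re-runs the model's division. The numbers are the ones the model chose in the transcript above (including its 550 km reference point), so this only checks the arithmetic, not whether those inputs are the right ones. The helper name `add_reasoning_cue` is made up for this example.

```python
REASONING_CUE = "Explain your answer step by step."


def add_reasoning_cue(prompt):
    """Append the step-by-step cue used in the second prompt above."""
    return f"{prompt.rstrip()} {REASONING_CUE}"


# Figures taken from the model's own response, not independently verified.
EIFFEL_TOWER_HEIGHT_M = 324
UPPER_ATMOSPHERE_KM = 550

upper_atmosphere_m = UPPER_ATMOSPHERE_KM * 1000
towers = upper_atmosphere_m / EIFFEL_TOWER_HEIGHT_M
print(round(towers))  # 1698, matching the model's step-by-step result
```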

Self-Criticism

In a similar approach to thought generation, we can ask language models to critique their own responses and suggest improvements, and then ask the model to revise its answers based on those improvements. Again, this takes advantage of the model effectively generating its own prompt by considering the entire context when predicting the next token to generate.

> What are the top 3 benefits of remote work?

The top 3 benefits of remote work are:

1. **Increased flexibility and work-life balance**: Remote work allows...

2. **Improved productivity and efficiency**: Without the distractions...

3. **Broader talent pool and reduced turnover**: By allowing employees...

> Can you critique your answer and suggest improvements or things that might have been missed?

Here's a critique of my previous answer and some suggestions for improvement:

* My answer was a bit too generic and didn't provide any specific examples or data to support the benefits.

* I didn't consider the potential drawbacks or challenges of remote work, which might be an important consideration for some readers.

* I didn't provide any suggestions for how companies can implement remote work effectively or address common challenges.

**Suggestions for improvement:**

* Provide more specific examples...

* Consider the potential drawbacks...

* Offer more practical advice...

* Consider adding more nuance...

> Rewrite your previous answer with these improvements in mind

[...]

I edited the response a bit for brevity, but you get the general idea.
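The same three-turn exchange can be scripted. The sketch below assumes a hypothetical `llm` callable that takes the conversation so far (a list of role/content dictionaries) and returns the next reply as a string. That shape is my assumption for illustration, not any particular vendor's API, and `fake_llm` is just a stand-in so the example runs without a model.

```python
CRITIQUE_PROMPT = (
    "Can you critique your answer and suggest improvements "
    "or things that might have been missed?"
)
REVISE_PROMPT = "Rewrite your previous answer with these improvements in mind"


def self_refine(llm, question):
    """Ask, critique, revise. The whole history is resent on every turn."""
    messages = []
    reply = ""
    for user_turn in (question, CRITIQUE_PROMPT, REVISE_PROMPT):
        messages.append({"role": "user", "content": user_turn})
        reply = llm(messages)
        messages.append({"role": "assistant", "content": reply})
    return reply, messages


def fake_llm(messages):
    # Stand-in for a real model call: just reports which turn it is on.
    return f"reply {len(messages) // 2 + 1}"


final_answer, history = self_refine(fake_llm, "What are the top 3 benefits of remote work?")
print(final_answer)
```

Because each call resends the full history, the critique becomes part of the prompt for the rewrite, which is the whole trick.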

Self-Criticism is also similar to an approach that several tools use to measure the performance of a language model. Quality measurement tools will often ask a different, higher-fidelity language model to judge the output of another model on qualities such as groundedness (how close the response is to the factual data), coherence, and fluency.

Decomposition

Decomposition is an approach where we ask a language model to break a problem down into individual, solvable steps. This technique is often used with “agents” - AI code that has access to specific functions that it can call to retrieve data. We can ask a language model to break a problem into discrete parts, and then solve each of those parts separately (whether through function calls or further AI invocations) to get our specific answer.

I use a flavor of this in my Mythic AI Game Master project, where I found it was much easier to ask the LLM a small, discrete, single-answer problem over several calls rather than trying to accomplish all I needed over one execution.

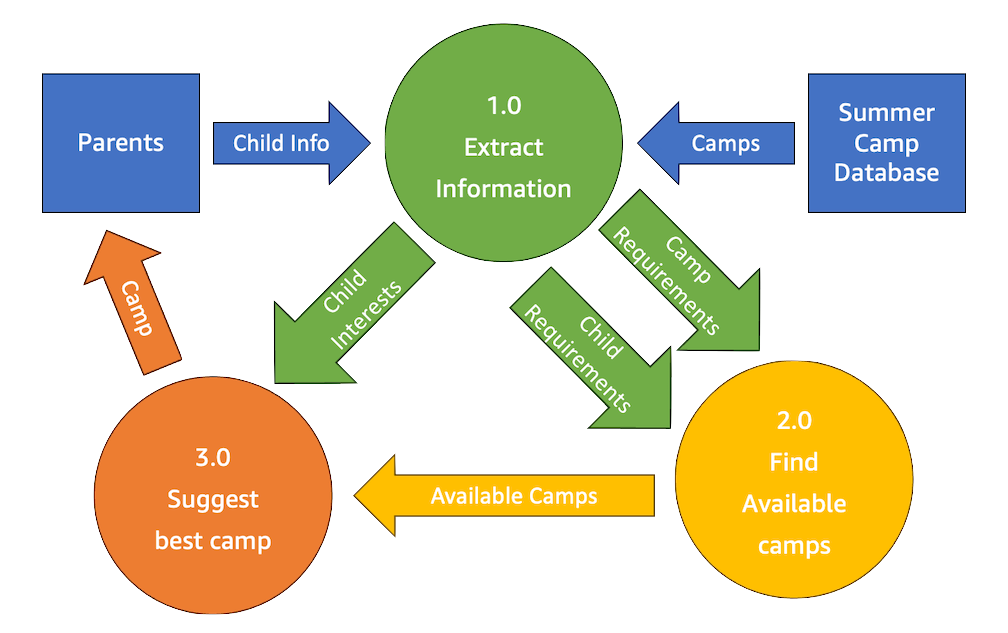

Image from https://medium.com/@flux07/prompt-decomposition-da646f0257f1
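As a rough illustration (this is not the code from my project), decomposition in Python could look something like this. The `llm` callable, the wording of the planning prompt, and the one-step-per-line convention are all assumptions for the sketch; `fake_llm` stands in for a real model.

```python
import re


def decompose(llm, problem):
    """Ask the model for a list of steps, one per line, and clean up list markers."""
    prompt = (
        "Break the following problem into small, independent steps. "
        "Return one step per line and nothing else.\n\n" + problem
    )
    steps = []
    for line in llm(prompt).splitlines():
        # Drop leading markers such as "1.", "2)" or "-" that models like to add.
        step = re.sub(r"^\s*(?:[-*]|\d+[.)])\s*", "", line).strip()
        if step:
            steps.append(step)
    return steps


def solve_by_decomposition(llm, problem):
    """Solve each step with its own small, single-answer call."""
    return [(step, llm(step)) for step in decompose(llm, problem)]


def fake_llm(prompt):
    # Stand-in for a real model call.
    if prompt.startswith("Break the following problem"):
        return "1. Find the tower height\n2. Find the atmosphere height\n"
    return f"answer to: {prompt}"


results = solve_by_decomposition(fake_llm, "How many towers reach the upper atmosphere?")
for step, answer in results:
    print(step, "->", answer)
```

In a real agent, each step might be routed to a function call instead of another model invocation.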

Ensembling

A complementary approach to decomposition is ensembling, where you take all those discrete answers and use them to create a larger “okay, given all this information we figured out, what’s the solution?” prompt. This approach is better executed through code, although you certainly could copy and paste results from discrete AI sessions into one massive prompt.

In a sort of automated retrieval-augmented generation approach, you could invoke a model and/or other functions as required, asynchronously, and then once all your smaller queries have completed, use their output to feed a prompt template that addresses the overall question.

Image from https://cameronrwolfe.substack.com/p/prompt-ensembles-make-llms-more-reliable
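Here is a minimal Python sketch of that flow: run the smaller queries concurrently, then drop their answers into a template for the final prompt. The template wording, the function names, and the `ask` coroutine are all hypothetical; `fake_ask` stands in for a real model or function call.

```python
import asyncio

FINAL_TEMPLATE = (
    "Using only the findings below, answer the question.\n\n"
    "Question: {question}\n\n"
    "Findings:\n{findings}"
)


async def gather_findings(ask, sub_questions):
    """Run every sub-question concurrently; gather keeps the original order."""
    answers = await asyncio.gather(*(ask(q) for q in sub_questions))
    return list(zip(sub_questions, answers))


def build_final_prompt(question, findings):
    """Feed the collected answers into one prompt for the overall question."""
    bullet_list = "\n".join(f"- {q} {a}" for q, a in findings)
    return FINAL_TEMPLATE.format(question=question, findings=bullet_list)


async def fake_ask(question):
    # Stand-in for a real model or function call.
    await asyncio.sleep(0)
    return f"(answer to '{question}')"


findings = asyncio.run(
    gather_findings(fake_ask, ["How tall is the tower?", "How high is the atmosphere?"])
)
final_prompt = build_final_prompt("How many towers fit?", findings)
print(final_prompt)
```

The final prompt would then go to the model in one last call.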

Answer Engineering

A technique that is likely required by most of the above approaches is Answer Engineering, a subset of prompt engineering where you steer the language model to give you the answer in a format you expect, likely something easily parsed by code like a JSON object, CSV, or simple yes/no response.

Answer engineering is an important part of prompt engineering when working with a language model through code, as you likely want to be able to act upon the response of the model, which isn’t easy to do if its output can be as variable as a text area on a web page. By using established patterns (and in some cases, prompt parameters) you can coerce the language model into returning a more code-friendly response.

Order the following statements from most to least positive. Return the results as a JSON array of strings. Only return the JSON array, nothing else.

- I forgot my homework on the bus.

- How many fingers am I holding up?

- I really enjoyed that magic show.

[

"I really enjoyed that magic show.",

"How many fingers am I holding up?",

"I forgot my homework on the bus."

]
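Even with a tightly worded prompt, it's worth validating what comes back before acting on it. This Python sketch parses the response above using the standard `json` module. The function name `parse_string_array` and the "keep only the outermost brackets" fallback are my own choices for illustration.

```python
import json


def parse_string_array(response):
    """Parse a model reply that should be a JSON array of strings."""
    text = response.strip()
    # Some models add extra prose around the JSON; keep only the outermost [...] span.
    start, end = text.find("["), text.rfind("]")
    if start == -1 or end < start:
        raise ValueError("no JSON array found in response")
    data = json.loads(text[start:end + 1])  # raises a ValueError subclass if malformed
    if not isinstance(data, list) or not all(isinstance(item, str) for item in data):
        raise ValueError("expected a JSON array of strings")
    return data


response = """[
"I really enjoyed that magic show.",
"How many fingers am I holding up?",
"I forgot my homework on the bus."
]"""
ranked = parse_string_array(response)
print(ranked[0])
```

If parsing fails, a common fallback is to re-prompt the model with the error message, at the cost of another call.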

Function Calling

Finally, we have function calling, which is an approach that is gaining popularity with developers. Function calling prompts generally have a list of available functions presented as part of the prompt, which include what parameters they require and what kind of operations they perform. The prompt is then engineered in a decomposition style where we instruct the LLM to call one of the functions explicitly if its data is required to respond to the initial prompt.

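using System;

using System.ComponentModel;

using Microsoft.SemanticKernel;

public sealed class TimePlugin

{

// [KernelFunction] exposes the method to the model; [Description] is the text the model sees when choosing a function.

[KernelFunction]

[Description("Retrieves the current time in UTC")]

public string GetCurrentUtcTime() => DateTime.UtcNow.ToString("R");

}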

There are a few things to note with this approach:

- The LLM doesn’t actually execute code; it often just returns a specific cue or format which instructs a wrapping library to execute the function with the parameters supplied by the language model. The library then injects the result of that function call back into the chat dialog and re-prompts the model.

- While you could code and parse this manually, a number of frameworks exist to automate most of these interactions for the developer. As an example, Semantic Kernel has a plugin architecture where you can register functions with a “planner” which will take care of the prompt management and function calling for you.

- The back-and-forth with this approach can result in more API / model invocations, each of which has a cost associated with it.
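To show what that wrapping library is doing under the hood, here's a stripped-down Python sketch of the loop. Everything about the protocol is an assumption made for illustration: the JSON shape (`{"function": ..., "arguments": ...}`), the `"function"` role name, and the `llm` callable are hypothetical and not how Semantic Kernel or any specific API does it. `fake_llm` stands in for a real model.

```python
import json
from datetime import datetime, timezone


def get_current_utc_time():
    """Retrieves the current time in UTC."""
    return datetime.now(timezone.utc).strftime("%a, %d %b %Y %H:%M:%S GMT")


# Functions the model is allowed to request, keyed by name.
FUNCTIONS = {"get_current_utc_time": get_current_utc_time}


def run_with_functions(llm, user_prompt, max_rounds=3):
    """Execute requested functions and re-prompt until the model answers in plain text."""
    messages = [{"role": "user", "content": user_prompt}]
    for _ in range(max_rounds):
        reply = llm(messages)
        try:
            call = json.loads(reply)
        except json.JSONDecodeError:
            return reply  # not JSON, so treat it as the final answer
        name = call.get("function") if isinstance(call, dict) else None
        if not isinstance(name, str) or name not in FUNCTIONS:
            return reply
        result = FUNCTIONS[name](**call.get("arguments", {}))
        # Inject the result back into the dialog, then loop to re-prompt the model.
        messages.append({"role": "assistant", "content": reply})
        messages.append({"role": "function", "content": str(result)})
    raise RuntimeError("model kept requesting function calls")


def fake_llm(messages):
    # Stand-in for a real model: request the time once, then answer with it.
    if messages[-1]["role"] == "function":
        return f"The current UTC time is {messages[-1]['content']}."
    return '{"function": "get_current_utc_time", "arguments": {}}'


answer = run_with_functions(fake_llm, "What time is it?")
print(answer)
```

Note that this one question took two model invocations, which is the cost trade-off mentioned above. The `max_rounds` cap is there so a confused model can't loop forever.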

Hopefully this gives you a few tips and tricks you can use to help guide your future generative AI experiences!