Multi-Agent Interactions Without Group Chat

Last week I worked on a side project inspired by some whitepapers I read on using generative AI to play social games. I thought, “Hey, why don’t I do something similar with the social RPG ‘Alice is Missing’?” I soon ran into some limitations with AgentGroupChat, the module behind the multi-agent chat workflow currently in beta within Semantic Kernel, and I’d like to discuss them here.

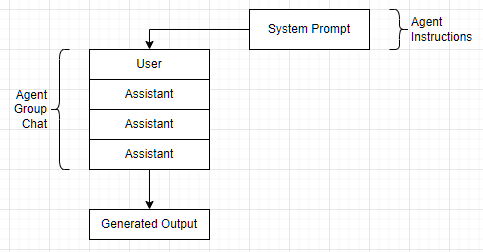

As discussed in a previous post, the AgentGroupChat flow exists to support multi-agent scenarios: situations where multiple generative AI agents are in dialog with one another. This approach is a relatively new way of performing a “self-reflecting” type of prompt engineering, where you ask another model to critique a generated response in an attempt to improve its quality. Microsoft shipped an example of how this works in their Getting Started with Agents Semantic Kernel demo, with one agent cued as a “copywriter” and another as a “director”, each with its own particular slants and biases about the subject matter.

This is all well and good, and it works pretty well for the scenario modeled in the feature: throwing a single user request into an arena of agents and letting them duke it out until they all come to a consensus. However, in a more complicated scenario, such as one where each agent fills in for a player in a conversational game like Alice is Missing, there are a few limitations.

AgentGroupChat is closed

One of the first roadblocks I stumbled into was the fact that AgentGroupChat, the “chat history” object that is used to orchestrate all the agent interactions, is quite limited. As a programmer, you’re not allowed to add any system prompts to this object, which can severely limit the applicability of the chat history. What I was trying to do was give ‘global’ instructions to all agents in the chat - for example, telling all of the registered agents that they had to limit their replies to a certain size. I quickly ran into a runtime exception stating that system prompts weren’t allowed.

In my last gamification attempt, I found that being able to inject system prompts was a very helpful way of keeping the generative AI focused on the task at hand despite the past history, so having this ability taken away is fairly limiting. That said, I think I understand why they removed this feature. Each agent in the multi-agent setup has its own set of instructions, essentially its own system prompt, which the AgentGroupChat module uses to steer that agent’s behaviour. Using these instructions, it can more or less inject its own system prompt at the beginning of the chat history (or possibly the end?) and have that one agent behave the way it was defined.
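To make that guess concrete, here is a minimal C# sketch of how a per-agent view of a shared conversation could be assembled. It is not Semantic Kernel's implementation: ChatMessage is a hypothetical stand-in for ChatHistory entries, placing the instructions at the front is an assumption (the end is equally plausible), and the sharedRules parameter shows one possible workaround for "global" instructions, folding them into each agent's own instructions since the shared chat rejects system messages.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for one entry in Semantic Kernel's ChatHistory.
public record ChatMessage(string Role, string Content);

public static class AgentView
{
    // Builds the conversation one agent would see: a single system message
    // holding its own instructions plus any rules shared by every agent,
    // followed by a copy of the shared transcript (which has no system
    // messages of its own). The shared list itself is never modified.
    public static List<ChatMessage> Build(
        string sharedRules,
        string agentInstructions,
        IEnumerable<ChatMessage> sharedHistory)
    {
        var view = new List<ChatMessage>
        {
            new ChatMessage("system", agentInstructions + "\n" + sharedRules)
        };
        view.AddRange(sharedHistory);
        return view;
    }
}
```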

Once you start, you can’t stop

Another thing I found working with AgentGroupChat is that the interaction is treated as a “continue until finished” type of interaction, where the programmer is meant to set up the conditions of each agent and then let the interaction between them run until completion (completion being defined by your Termination Strategy). It’s actually quite cumbersome to create a flow where you only get a response from one agent within the group, or even one from each agent. The best way I could find was to effectively watch and wait for a certain number of returned responses from the agent group chat.

As someone who’s used to calling GetChatMessageContentAsync for each individual message, I found this experience a bit jarring. Instead, what AgentGroupChat provides you is an async enumerable that you iterate through as the agents generate their responses.

```csharp
await foreach (var message in groupChat.InvokeAsync(cancellationToken))
{
    // logic to process the next message in the chat
}
```

This will continue until the TerminationStrategy you configure when creating the AgentGroupChat tells it to stop, unless you also pass a cancellation token into the InvokeAsync method and use it to force a stop. Either way, it’s a bit awkward when you only want a specific number of messages returned.
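It helps to picture the kind of loop a group chat runs. The C# below is a simplified model written for illustration, not Semantic Kernel's source; SimpleAgent, GroupLoop.RunAsync, and its shouldTerminate and maxIterations parameters are all hypothetical. Agents reply in round-robin order and every reply is streamed back to the caller, so nothing ends the stream except the termination check, the iteration cap, or cancellation.

```csharp
using System;
using System.Collections.Generic;
using System.Runtime.CompilerServices;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical agent: a display name plus a function that writes a reply
// given the transcript so far.
public record SimpleAgent(string Name, Func<IReadOnlyList<string>, Task<string>> ReplyAsync);

public static class GroupLoop
{
    // Simplified "continue until finished" loop: agents reply in round-robin
    // order, each reply is streamed to the caller, and the stream ends only when
    // shouldTerminate returns true, maxIterations is reached, or the token is cancelled.
    public static async IAsyncEnumerable<string> RunAsync(
        IReadOnlyList<SimpleAgent> agents,
        Func<string, bool> shouldTerminate,
        int maxIterations,
        [EnumeratorCancellation] CancellationToken cancellationToken = default)
    {
        var transcript = new List<string>();
        for (int i = 0; i < maxIterations; i++)
        {
            cancellationToken.ThrowIfCancellationRequested();
            SimpleAgent agent = agents[i % agents.Count];
            string reply = $"{agent.Name}: {await agent.ReplyAsync(transcript)}";
            transcript.Add(reply);
            yield return reply;
            if (shouldTerminate(reply)) yield break;
        }
    }
}
```

Because the caller only sees an IAsyncEnumerable, the producer decides when the conversation ends; the consumer's levers are to stop iterating or to cancel.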

Note: Thanks to Chris Rickman, who reached out on LinkedIn to point out that an overload of the InvokeAsync method, the one that takes a ChatCompletionAgent, will only return one response and stop. It’s still a bit confusing since it has the same IAsyncEnumerable return type, but at least you don’t have to mess with cancellation tokens.
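When you need exactly N messages from the open-ended stream, one option is a small extension that stops after N items. TakeAsync below is a hypothetical helper written for this sketch, not part of Semantic Kernel (the System.Linq.Async package offers an equivalent Take operator), and Demo.EndlessChat is a fake stream standing in for groupChat.InvokeAsync so the example runs on its own.

```csharp
using System;
using System.Collections.Generic;
using System.Runtime.CompilerServices;
using System.Threading;
using System.Threading.Tasks;

public static class AsyncTake
{
    // Yields at most `count` items from `source`, then stops. Exiting the
    // await foreach early disposes the source enumerator, so no further
    // items are requested from the producer.
    public static async IAsyncEnumerable<T> TakeAsync<T>(
        this IAsyncEnumerable<T> source,
        int count,
        [EnumeratorCancellation] CancellationToken cancellationToken = default)
    {
        if (count <= 0) yield break;
        int taken = 0;
        await foreach (var item in source.WithCancellation(cancellationToken))
        {
            yield return item;
            if (++taken >= count) yield break;
        }
    }
}

public static class Demo
{
    // Counts how many replies the fake chat has produced so far.
    public static int Produced;

    // Fake, never-ending group chat standing in for groupChat.InvokeAsync(...).
    public static async IAsyncEnumerable<string> EndlessChat()
    {
        for (int i = 1; ; i++)
        {
            await Task.Delay(1); // simulate model latency
            Produced++;
            yield return $"agent-{i} reply";
        }
    }
}
```

Usage would look like `await foreach (var message in groupChat.InvokeAsync(cancellationToken).TakeAsync(2)) { ... }`. Whether this also halts further model calls depends on the producer being an async iterator, as the fake one here is.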

How do we get around it?

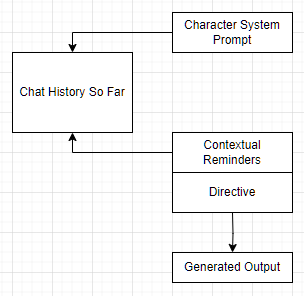

The approach I took when coding my ‘Alice is Missing’ simulator, where up to five other generative AI agents contribute to the chat, each with their own goals and personalities, wasn’t far off from what (I assume) the Semantic Kernel library does behind the scenes. Similar to the Mythic AI GM project, I pass around a ChatHistory object, manipulate it to serve the specific purpose I’m looking for, and manually call GetChatMessageContentAsync for each “persona” when I need a response.

I wrapped each persona in a Character class that has one key method, Respond. Respond takes a ChatHistory representing the interactions between the characters and the player in the game so far. From there, we create a private copy of the ChatHistory and modify it, injecting the system prompt for this specific character as well as other directives to attune their response.
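The Character class itself isn't reproduced in this post, so here is a minimal sketch of the shape described above, under stated assumptions: ChatMessage is a hypothetical stand-in for Semantic Kernel's ChatHistory entries, and the complete delegate stands in for a call such as GetChatMessageContentAsync so the example runs without a model. Reminders are appended after the history, matching the "contextual reminders" at the end of the example prompt in this post.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical stand-in for a ChatHistory entry.
public record ChatMessage(string Role, string Content);

public class Character
{
    public string Name { get; }
    private readonly string _systemPrompt;
    // Stand-in for a model call such as GetChatMessageContentAsync: receives
    // the assembled prompt and returns the reply text.
    private readonly Func<IReadOnlyList<ChatMessage>, Task<string>> _complete;

    public Character(string name, string systemPrompt,
        Func<IReadOnlyList<ChatMessage>, Task<string>> complete)
    {
        Name = name;
        _systemPrompt = systemPrompt;
        _complete = complete;
    }

    // Builds a private copy of the shared history: this character's system
    // prompt first, then the shared messages, then the contextual reminders.
    // The shared history passed in is never modified.
    public async Task<string> Respond(IReadOnlyList<ChatMessage> sharedHistory,
        IEnumerable<string> reminders)
    {
        var prompt = new List<ChatMessage> { new ChatMessage("system", _systemPrompt) };
        prompt.AddRange(sharedHistory);
        foreach (var reminder in reminders)
            prompt.Add(new ChatMessage("system", reminder));
        return await _complete(prompt);
    }
}
```

With Semantic Kernel proper, the copy would presumably be a new ChatHistory built from the shared one, and the delegate would be replaced by the chat completion service call.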

This is somewhat similar to what AgentGroupChat does behind the scenes, but it affords us a lot more control. For this particular game, the character system prompt consists of the character’s name, relationship to Alice, personality, background, secret information, and specific drive, while the contextual reminders are notes about any relationships with the other characters they’re responding to, along with a reminder of their drive and personality.
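As an illustration of how those pieces might be stitched together, the sketch below turns a hypothetical CharacterSheet record into the character system prompt and the closing personality/drive reminder, reusing the wording of the Julia example prompt in this post. The record, its field names, and the one-line-per-directive layout are assumptions, not the author's code.

```csharp
using System;
using System.Text;

// Hypothetical character sheet; fields mirror the prompt pieces listed above.
public record CharacterSheet(
    string Name, int Age, string Relationship, string Personality,
    string Background, string Secret, string Drive);

public static class PromptBuilder
{
    // Assembles the per-character system prompt, one directive per line.
    public static string SystemPrompt(CharacterSheet c) =>
        new StringBuilder()
            .AppendLine($"You are a player in a role playing game. Your name is {c.Name}. You are {c.Age} years old.")
            .AppendLine(c.Relationship)
            .AppendLine($"Your personality can be described as {c.Personality}.")
            .AppendLine($"Your background: {c.Background}")
            .AppendLine($"There's a secret only you know: {c.Secret}. Try not to tell anyone else.")
            .AppendLine(c.Drive)
            .Append($"Prefix all your responses with {c.Name}:")
            .ToString();

    // The reminder repeated at the end of the prompt: personality and drive again.
    public static string Reminder(CharacterSheet c) =>
        $"Your personality can be described as {c.Personality}. {c.Drive}";
}
```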

I found I had to repeat the personality and drive points as the chat history grew, just to ensure they remained relevant aspects of the generative AI’s output. Research has shown that generative AI models often give more weight to the beginning and end of the context, likely an artifact of training on so many papers and paragraphs where the thesis and conclusion naturally occur in those places.

Here’s an example of what a fully reconstructed prompt may look like:

```text
//character system prompt
"You are a player in a role playing game. Your name is Julia. You are 19 years old.
You are Alice's secret girlfriend. When asked about your relationship to Alice, don't mention that you're her girlfriend.
Your personality can be described as Crazy and Responsible.
Your background: [...]
There's a secret only you know: [...]. Try not to tell anyone else.
Any time controversial or negative things are brought up about Alice, leap to her defense unconditionally. //drive
Prefix all your responses with Julia:"

//game context prompt
"[...] A few days ago, Alice stopped responding. You haven't heard from her since."

//character backgrounds
[...]

//location information
[...]

//suspect information
[...]

//game start system prompt
"The game is now starting.
You should respond in short sentences as though writing a text message, no more than 160 characters at a time.
Two players can't be in the same place at the same time, and you cannot meet in person.
The locations you can search include the Silent Falls train station, Kalisto Rivers State Park, the Dripping Dagger nightclub, the old barn on Cambridge Street, or the lighthouse on the Howling Sea cliffs.
Only one player can search a location at a time.
You won't find Alice unless you're explicitly told you found her."

//chat history start
"Charlie: Hey! Sorry for the big group text, but I just got into town for winter break at my dad's and haven't been able to get ahold of Alice. Just wondering if any of you have spoken to her?"
"Charlie: Maybe we could all meet up and brainstorm where she might be? I mean, it's less stressful than looking for her alone, right? Plus, we can throw in some snacks! 🍕"
"Jack: I appreciate you reaching out, Charlie. But honestly, I'm worried sick about Alice. I think we need to split up and check some specific places."
"Charlie: Totally understand, Jack! 😟 Just remember, it’s important to keep a level head. How about I check out the old barn? I’ll bring snacks as backup! 🍪"
"Dakota: Let's not forget how important it is to stay cautious. I'll head to the lighthouse. I have a feeling it could lead to something important regarding Alice. 🕵️‍♀️"

//contextual reminders
"Your personality can be described as Crazy and Responsible. Any time controversial or negative things are brought up about Alice, leap to her defense unconditionally."
"Remember your relationship with Dakota when you respond: '[...]'"
```

As you can imagine, this prompt gets longer and longer as the game progresses, but with the new gpt-4o-mini model I’ve been able to process an entire 45-minute game using only about $0.85 of credits!
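The growth is easy to quantify under a simplifying assumption: every turn resends a fixed preamble of P tokens (system prompt, game context, reminders) plus the whole history so far, and each message adds roughly m tokens. Over T turns the input is then about T*P + m*T*(T-1)/2 tokens, which grows quadratically with game length. The helper below is a hypothetical back-of-the-envelope calculator, not the author's accounting; token counts and per-million-token prices are inputs you supply (check OpenAI's current pricing for gpt-4o-mini), and it ignores the player's own messages.

```csharp
using System;

public static class CostEstimate
{
    // Rough total cost of a game in which every turn resends the whole prompt.
    // preambleTokens: system prompt + game context + reminders, sent every turn.
    // tokensPerMessage: average size of one chat message (also used as reply size).
    // Prices are USD per million tokens and must be supplied by the caller.
    public static double TotalUsd(int turns, int preambleTokens, int tokensPerMessage,
        double inputUsdPerMillion, double outputUsdPerMillion)
    {
        long inputTokens = 0;
        for (int t = 0; t < turns; t++)
            inputTokens += preambleTokens + (long)t * tokensPerMessage; // history grows by one message per turn
        long outputTokens = (long)turns * tokensPerMessage;
        return inputTokens / 1e6 * inputUsdPerMillion + outputTokens / 1e6 * outputUsdPerMillion;
    }
}
```

For long games the history term dominates, which is why a cheap per-token model matters more than trimming the fixed preamble.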

This post has already got a bit long so I’ll finish up tomorrow with a more pointed description of how we generated the characters and how they interact.